Why AI analysis tools need to produce artifacts, not transcripts

TL;DR: We've taught Count's AI agent to build more sophisticated canvas layouts.

We've all been there. You drop in a CSV and ask a question. You get a wall of text back. It looks…good. It's thoughtful, maybe even insightful. It asks if you want the logical follow-up question answered. Obviously you do. You type "yes". Another wall. Another obvious follow-up. "Yes".

Most AI analysis tools follow the same loop. You ask a question. You get paragraphs of explanation. The AI offers to "dig deeper" and you say yes—because what else would you say? More paragraphs arrive. You bounce between "yes, obviously" and "keep going" until you've accumulated a transcript that you now have to do something with.

The AI is performing analysis. Narrating what it sees. Offering to narrate more. But the work of turning that performance into something anyone else can use still falls entirely on you.

It's just narration, and it's exhausting in a specific way that I think we're all starting to realise with LLMs: you're spending your energy on integration work the AI should be doing, while neglecting the interpretation work only you can do.

This is why we all get sent reams of AI slop these days. Or screenshots in Slack with an expectant 👀 that asks: is this interesting, or just something?

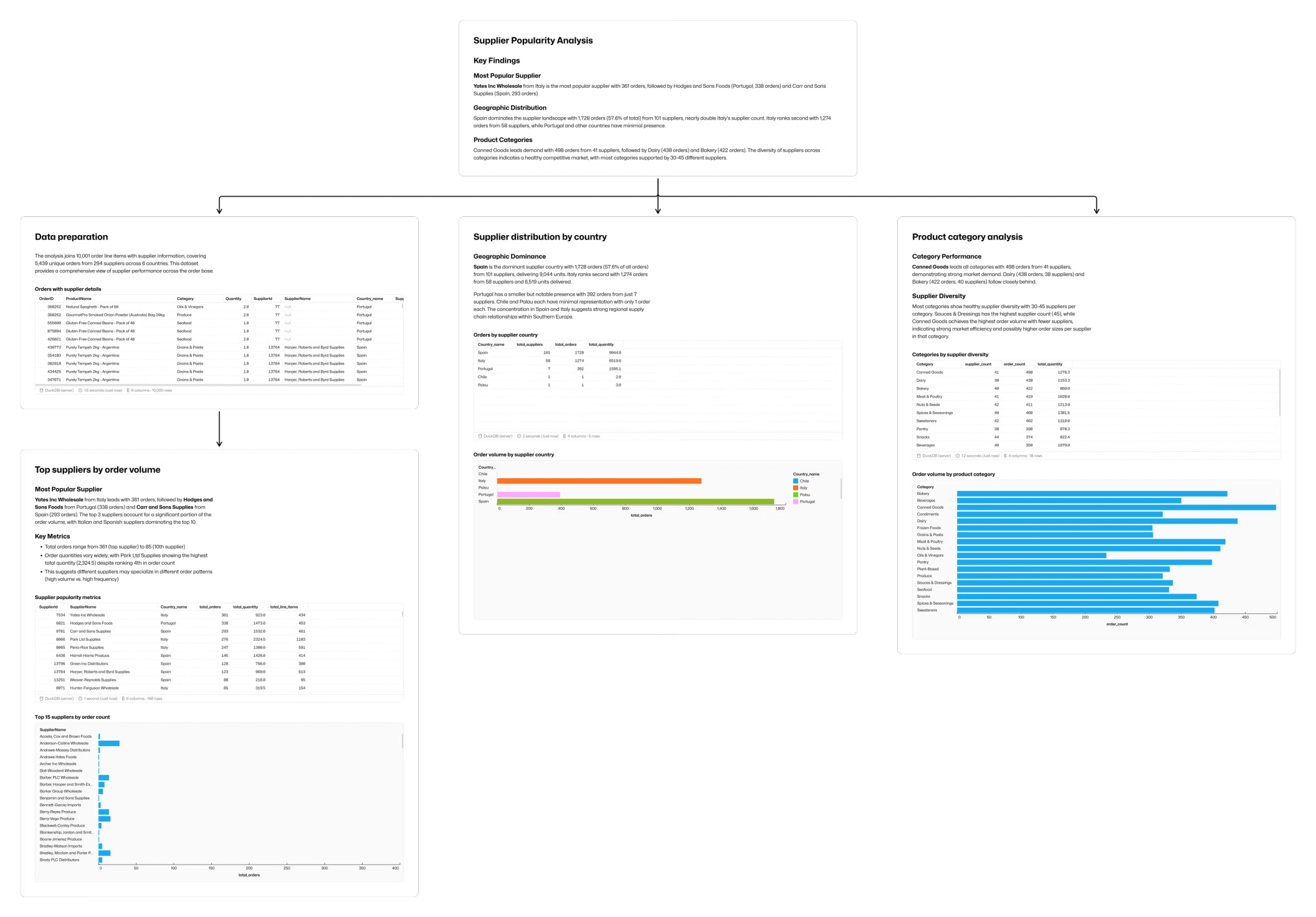

When we launched Count's AI agent, we focused it on building a very specific type of analysis in the canvas. It tackled the user's question, found the hierarchy embedded in the answer, and laid out analysis, visualisations, and tables in an information-rich (fine, let's call it dense) tree.

It did more of the integration-brain-work than we'd seen previous AI tools do, while still giving you the flexibility to adjust, reframe, and refocus.

The first moment it truly clicked for me was when I remembered that every object it made was just like every object I could make…just…already made for me. I plucked the bits that interested me out, dragged them across the canvas, and extended them to tell the story I needed to tell.

It cut down my manual effort in the same way that our Count Metric catalogs previously removed much of the SQL I used to write on a daily basis.

Workflows like this are where we saw the agent having a transformative impact on analysts and non-analysts alike. It also reflected a foundational truth in what we were building:

When the AI's job is to produce an artifact—not explain one—the interaction fundamentally shifts. You're no longer processing a stream of text, deciding what to retain and what to let pass. You're looking at a thing and reacting to it as a whole. Move this. Cut that. Break this out by region. Why is this metric here?

This is how people actually work. We iterate on objects, not transcripts. We think by manipulating things in front of us, not by reading linear accounts of what those things might eventually look like.

This week we've expanded the agent's capabilities so it can produce other types of foundational output: reports, presentations, even dashboards.

These aren't just different formats. They're artifacts designed to travel—to bring people together around analysis rather than leaving them to synthesise it alone.

That matters because an analysis doesn't succeed by being correct. It succeeds by getting adopted: believed, circulated, referenced when decisions get made. The graveyard of corporate life is filled with analyses that were right and didn't matter. They died with their methodology intact and their stakeholders unmoved. What they lacked wasn't rigour. It was an object that people could gather around, react to, extend.

Much of the industry has converged on the pitch that "everyone is an analyst now." Democratising. Empowering. Tidily vague about what happens next. But many people having parallel private conversations with data doesn't produce shared understanding. It produces noise. More charts, less alignment. Everyone has insights; no one has agreement.

The dashboard, the report, the presentation: these become focal points. People argue about what's there and what's missing. They extend them in directions you hadn't considered. The analysis becomes a conversation, with a shared object at the centre.

That's what "everyone can use data" actually looks like. Not everyone doing analysis in private, but everyone able to engage with an artifact legible enough to react to. It circulates. It accumulates context. It becomes something the organisation thinks with, together.

Everything the agent builds is still auditable, editable, and—thanks to our compute layer—more accurate and less error-prone than AI tools that guess at what analysis looks like rather than computing it.

That's when analysis stops being noise and starts being decision. And that shift has nothing to do with AI getting smarter. It has everything to do with giving humans back the work that was always theirs.