Playbook: Driving value through product experimentation

Ever since we decided to focus the next batch of articles on how data teams can drive organizational value, I’ve been excited to talk about product experimentation.

Product experimentation sits at the crossroads of data, engineering, and the wider business: it requires a solid statistical foundation, a good understanding of your product and users, and teamwork and communication across many functions. As such, it is a natural domain for data teams to own, and owning it can be a powerful way to demonstrate the value of the data team.

For this playbook, I’ll be diving into the experimentation processes that underpin how product-led data teams ensure their organizations do product experimentation well, and how that process has transformed how the data team is valued in the larger organization.

Thanks to the following folks who were willing to share their stories and give some feedback!

- Mike Cohen, Head of Data at Substack

- Omer Sami, Head of Product Analytics at Vio.com

- Callum Ballard, Analytics Lead at Cleo AI

- Artur Yatsenko, Head of Data Platform & Product Analytics at Urban Sports Club

Where experimentation goes wrong

“Shipping experiments willy nilly without thinking them through could lead to some really terrible outcomes - both in the product and within the organization.” - Mike Cohen, Head of Data at Substack

While rapid product experimentation is the drumbeat behind many product-led organizations, it is far from a flawless process. Without certain guardrails and processes, experimentation is prone to:

- Erroneous interpretation of results → incorrect conclusions and next steps

- Poor experiment designs → few or no significant results

- Poor communication of ongoing and previous experiments → duplicate and overlapping tests

- Lack of trust in experiments → people trust their instincts over the experiment results

Not every motivation for improving experimentation is about avoiding poor results. Callum Ballard, Analytics Lead at Cleo AI, had a more positive reason for revamping his team’s experimentation frameworks:

“I could see that each of Cleo’s squads were each individually doing great things in the experimentation space. However, many of these good practices were not universal across the business.” -Callum Ballard, Analytics Lead @ Cleo AI

Given how directly rapid experimentation affects many companies’ bottom lines, it is essential to have a trusted and reliable experimentation process.

Where the data team comes in

The data teams at Substack and Vio.com have taken on the responsibility of owning and maintaining the company’s experimentation process. This means they own:

- The process that all teams follow when running experiments, including:

- Test documentation

- How test documents can be easily found at a later date

- The training of all employees in how this process works, and the statistical principles that underpin the process

Part 1: Owning the Process

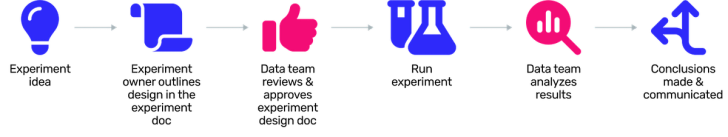

When Vio.com and Substack took ownership of their organization’s experimentation process, they needed something that was robust but also approachable enough to actually be used. Remarkably, both teams came up with very similar experiment processes.

We’ll walk through a few of the key steps:

1. A formalized document for each experiment

“It sounds like a crushingly boring point, but improved test documentation has been such a quality-of-life win.” - Callum Ballard

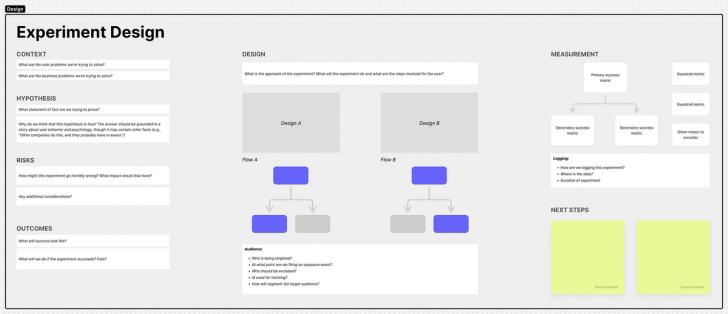

The first thing all of these data leaders did was to create a formal document to be filled out at the start of each experiment. This form must be filled out by the project owner (often the PM, but sometimes the analyst).

This form includes:

- The context of this experiment (why are we running it?)

- The hypothesis (what do we think will happen?)

- The risks (what could go wrong?)

- The design (how will it work - who will receive it and when?)

- The measurement (how will we know if it succeeds or fails?)

- The outcomes (what will happen if it succeeds/fails?)
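As a rough illustration, the form sections above could be captured as a small structured template, which makes it easy to spot blank sections before review. This is only a sketch: the class name `ExperimentDoc`, the `missing_sections` helper, and the example values are hypothetical and do not come from any of the teams interviewed.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class ExperimentDoc:
    """Hypothetical template; field names mirror the form sections listed above."""

    owner: str             # project owner (often the PM, sometimes the analyst)
    context: str = ""      # why are we running it?
    hypothesis: str = ""   # what do we think will happen?
    risks: str = ""        # what could go wrong?
    design: str = ""       # how will it work, who will receive it and when?
    measurement: str = ""  # how will we know if it succeeds or fails?
    outcomes: str = ""     # what will happen if it succeeds/fails?

    def missing_sections(self) -> list[str]:
        """Return the names of sections that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Made-up example values, for illustration only.
doc = ExperimentDoc(
    owner="PM, checkout squad",
    context="Checkout drop-off is high on mobile.",
    hypothesis="A shorter form increases completed checkouts.",
)
print(doc.missing_sections())  # sections still to be filled in before review
```

In practice the doc will usually live in a document tool rather than in code; the point is simply that a fixed set of sections makes incomplete docs easy to detect.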

2. The experiment doc goes through approval

Adding a specific review step to the process was beneficial for a few reasons:

- it made sure the experiment was set up for success before anything began (and avoided many downstream errors)

- it made sure the official document didn’t become something that got skipped over

- it encouraged collaboration between teams

Holding one or more people accountable for the quality of the document keeps it relevant and sets the experiment up for success.

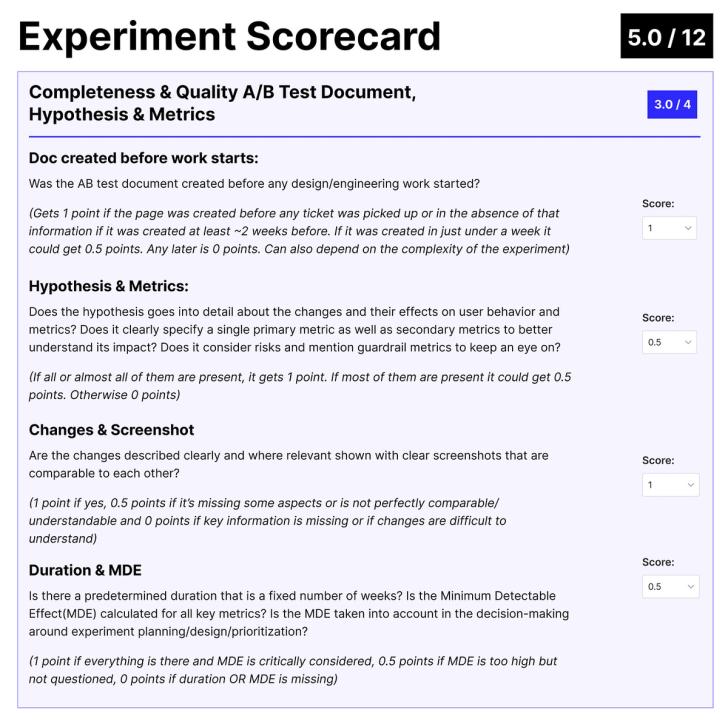

The approval process consists of:

- Assigning reviewers and approvers

- Completing a scorecard (or some kind of scoring system)

Reviewers consist of:

- 1 member of the data team

- (optionally) 1 member of the engineering team

You can also use the review step to promote cross-team collaboration:

“Another benefit of the experiment doc is that you can solicit cross-team collaboration a bit more easily. So rather than being siloed within your team, you can solicit someone from another team that has a lot more experimentation experience to be like ‘Hey, could you take a look at this doc?’” - Mike Cohen

There is also one single approver. This is almost always someone on the data team.

Omer and his data team also implement a scorecard when reviewing experiment docs. This gives a transparent way to communicate what a good experiment doc looks like. The goal isn’t necessarily perfection though:

“We never hit 100%. It’s about the intentions and making sure we’re setting ourselves up for success.” - Omer Sami

Specifically, the goals of the scorecard are:

- To measure & understand how the company is doing with experimentation and identify areas to improve

- To give more guidance & feedback to teams on specific experiments to improve experiment design

- To understand what kind of experiments are more likely to succeed, to help with product prioritization

The scorecard stays in the same tool as the official experiment document.
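The article does not list the actual scorecard criteria, so the sketch below uses made-up ones purely to show the mechanics: a reviewer ticks off criteria, and the scorecard reports the share met plus what is still missing. The `CRITERIA` list and the `score_doc` function are assumptions for illustration, not Omer's scorecard.

```python
from __future__ import annotations

# Hypothetical criteria: the real scorecard's contents are not given in the article.
CRITERIA = [
    "hypothesis is specific and falsifiable",
    "primary metric is defined up front",
    "sample size and duration are justified",
    "risks and guardrail metrics are listed",
    "next steps are stated for both success and failure",
]


def score_doc(checks: dict[str, bool]) -> tuple[float, list[str]]:
    """Return (share of criteria met, criteria still unmet)."""
    unmet = [c for c in CRITERIA if not checks.get(c, False)]
    score = (len(CRITERIA) - len(unmet)) / len(CRITERIA)
    return score, unmet


# A reviewer marks every criterion as met except one.
review = {c: True for c in CRITERIA}
review["sample size and duration are justified"] = False

score, unmet = score_doc(review)
print(f"{score:.0%}", unmet)
```

Collecting these scores across experiments is one way to serve the first goal above: seeing which criteria are most often missed shows where the company's experimentation practice needs the most support.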

3. Consistent and robust analytics

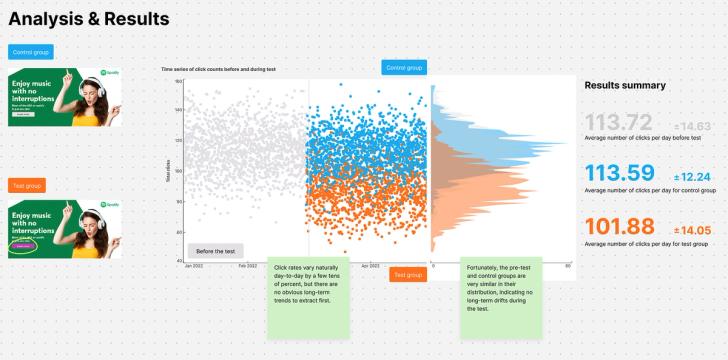

Once the experiment has concluded, it is the data team’s responsibility to report on the results of that test. This could be done by creating dashboards or reports in a BI tool, with screenshots of the results placed in the experiment doc. [To avoid the manual screenshot process, see how you can run experiments in the Count canvas here.]
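The article does not say which statistical analysis each team runs. As one common possibility for conversion-style metrics, a two-proportion z-test can be written with the Python standard library alone. The function name and the counts below are made up for illustration.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist


def two_proportion_ztest(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (absolute lift of B over A, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value


# Made-up counts: 480 of 10,000 control users convert vs 540 of 10,000 in the variant.
lift, p_value = two_proportion_ztest(conv_a=480, n_a=10_000, conv_b=540, n_b=10_000)
print(f"lift={lift:.4f}, p={p_value:.3f}")
```

Having every experiment report the same few numbers, computed the same way, is a large part of what makes the analytics step consistent.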

Artur Yatsenko stresses the importance of timing when delivering experiment results. Delivering them too early can lead to ‘peeking’, and thus to acting on premature conclusions. Be aware of how the timing and format of your statistical results can affect the decisions people make.
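To see why peeking matters, a small simulation helps. The sketch below runs A/A tests, where both arms have the same true conversion rate, so any "significant" result is a false positive. It compares checking significance at several interim looks against checking once at the end. All parameters (number of simulations, looks, conversion rate) are arbitrary assumptions chosen to keep the example fast.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a: int, conv_b: int, n: int) -> float:
    """Two-sided p-value of a two-proportion z-test with n users per arm."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:
        return 1.0  # no variation observed yet, so nothing to test
    z = (conv_b - conv_a) / n / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(n_sims=500, n_per_arm=1000, looks=10, rate=0.1, alpha=0.05, seed=0):
    """Simulate A/A tests; return (rate when peeking, rate at final look only)."""
    rng = random.Random(seed)
    # Evenly spaced interim looks; the last one is the planned end of the test.
    checkpoints = {n_per_arm * k // looks for k in range(1, looks + 1)}
    peeking_hits = final_hits = 0
    for _ in range(n_sims):
        conv_a = conv_b = 0
        any_significant = False
        for n in range(1, n_per_arm + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n in checkpoints and p_value(conv_a, conv_b, n) < alpha:
                any_significant = True  # a peeker would have called a winner here
        peeking_hits += any_significant
        final_hits += p_value(conv_a, conv_b, n_per_arm) < alpha
    return peeking_hits / n_sims, final_hits / n_sims


peeking, final_only = false_positive_rates()
print(f"peeking at 10 looks: {peeking:.1%}, final look only: {final_only:.1%}")
```

Because the final look is one of the checkpoints, the peeking rate can never be lower than the final-look rate, and it is typically much higher. That gap is the cost of acting on whichever interim look happens to cross the significance threshold.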

4. Conclusions that are visible and searchable

When these results are in, the experiment team can decide how they are going to interpret them, and what the next steps will be.

A key part of this process is the ability to find old experiments to look back on later:

“It’s nice to have these old experiments to look back on. Somewhat recently someone was like ‘Oh we want to try X’ and I had a vague recollection of having done something about that because I had read an experiment doc about it at some point and I was able to find it and share it with that person to avoid duplicating the same work.” - Mike Cohen

This can be done in Google Docs, Confluence, Notion, or a similar document management tool, as long as it is easily searched and indexed by everyone in the company. Ideally, you use a tool that combines context, planning, results, and conclusions in one place.

Part 2: Training

“Given the importance of experimentation in our product development cycle, it makes no sense for the technical knowledge of how our testing analysis actually works to be confined to the product analysts alone.” -Callum Ballard

To enact longer-lasting change, the data teams also took responsibility for educating and training the rest of the organization about product experimentation.

This training ranged from practical topics, like how the experimentation process works and why to do A/B testing at all, to more advanced ones, like an introduction to Bayesian statistics.

This training was delivered through a combination of:

- in-person training sessions

- weekly test and learn sessions

- readily accessible training materials for newcomers (and anyone wanting a refresher)
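As an example of what an introductory Bayesian session might cover, the sketch below estimates the probability that variant B's conversion rate is higher than A's. It assumes uniform Beta(1, 1) priors and made-up counts; the article does not describe the content of the teams' actual training materials, so treat this as an illustration of the topic rather than their method.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each arm is Beta(1 + conversions, 1 + non-conversions).
        a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += b > a
    return wins / draws


# Made-up counts: 480 of 10,000 control users convert vs 540 of 10,000 in the variant.
print(prob_b_beats_a(conv_a=480, n_a=10_000, conv_b=540, n_b=10_000))
```

A statement like "there is roughly an X% chance that B is better than A" is often easier for non-analysts to reason about than a p-value, which is one reason this framing is popular in training sessions.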

The results

What have been the results of the data team taking ownership of the company’s experimentation framework?

Slowing down to move faster

One major difference is that by asking people to go through this process they have to slow down. They can no longer start tinkering and building without thinking things through. Ultimately this has led to better results.

Better organizational numerical literacy

Through increased exposure to statistical concepts via the experimentation process, many business partners have found increased confidence in using data and analytics more broadly. This has led to an improved organizational data literacy that is felt in all other areas of their work, not just experiments.

Results are more trusted

Having a consistent process that everyone in the business follows has led to far more trust in experiments, including experiments that someone had no involvement in. For example, if someone from the product team reviews the results of a growth team experiment, they know the growth team followed the same process, so they are far more likely to understand and trust the results.

The data team is delivering clear value

By orchestrating such an important organizational process (experimentation), both Omer and Mike’s data teams have a clear proof of value to the larger organization. They are seen as pivotal members of the organization.

Better communication and collaboration between parts of the business

An unexpected benefit of the experimentation process is how nicely an experiment doc packages an experiment up as a story. These stories are easily shared to showcase what a team has accomplished over a week or a quarter, especially with other teams who are less familiar with its day-to-day activities.

Moreover, having a consistent framework makes it easy for one team to ask for input and advice on an experiment from another.

Tips for implementing an experimentation framework…tomorrow

What advice would these data leaders offer for data teams that are going to try to set up and run a product experimentation process?

- Don’t over-engineer the process. It needs to be useful but it also can’t be so complicated that no one completes it.

- Get buy-in. To do this well, you’re going to need all the contributing teams to be bought into this process and the value it can generate. This means meeting with Product, Growth, Engineering, Design, and others to make sure they are all in agreement.

- Be ready to hold the line.

“It’s hard to get people excited about writing documentation. It’s been challenging to stop people from jumping right into writing code and launching an experiment. But you have to be the ones holding the line and saying ‘hey where’s the experiment doc for that?’” - Mike Cohen

- Have a central place to talk about experiments.

“We have a Slack channel called #experiments where people post their experiment docs. This is how we make sure everyone in the company can see ongoing and finished experiments, and discuss them. It’s really visible to everyone who wants to see it.” - Mike Cohen


Thanks again for reading!